Mastering E-Commerce Backend Development with Django: A Comprehensive Guide to Project Setup

Greetings, illustrious developers! As we embark on this journey to create an e-commerce backend system using Django, there's some essential groundwork we must lay first. Crafting an efficient, secure, and scalable backend requires not just an understanding of Django, but an array of other technologies and tools that come together in a polyphonic symphony of code. In this blog post, I will walk through the crucial components we'll employ and guide you through their installation and configuration.

Prerequisites

Before we begin, ensure you have Python 3.x installed. If not, you can download it from the official Python website.

Familiarity with the command line and some basic knowledge of Python and Django will also help. Prior Django experience is not strictly necessary, since we are about to dive deep into its internals. For a refresher, see my earlier posts on Django basics and Django auth.

Packages and Tools to Install

Django

The backbone of our e-commerce backend. Django is a robust and versatile framework designed to encourage rapid development and clean, pragmatic design.

Django Rest Framework (DRF)

DRF enhances Django by adding a set of functionalities to build APIs effortlessly. It provides features like serialization and view sets. We will see more of this as we progress in the development.

Black

The uncompromising code formatter. It makes your code look as if it was written by a single person. Trust me, it helps.

Flake8

While Black takes care of formatting, Flake8 watches over the quality of your code. It enforces a style guide and detects issues like syntax errors, undefined variables, and more.

Pytest

As proponents of Test-Driven Development (TDD), we will use pytest, which handles simple unit tests as well as complex functional testing.
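To give a flavor of what a pytest test looks like, here is a minimal sketch. The `cart_total` function and both tests are hypothetical and not part of the project yet; the only pytest behavior relied on is that it collects functions whose names start with `test_` and treats a failing `assert` as a test failure.

```python
from decimal import Decimal


def cart_total(items):
    """Sum price * quantity over (price, quantity) pairs. Hypothetical helper."""
    return sum((price * quantity for price, quantity in items), Decimal("0"))


def test_cart_total_sums_line_items():
    # Two units at 9.99 plus one unit at 5.00
    items = [(Decimal("9.99"), 2), (Decimal("5.00"), 1)]
    assert cart_total(items) == Decimal("24.98")


def test_cart_total_of_empty_cart_is_zero():
    assert cart_total([]) == Decimal("0")
```

Saved in a file whose name starts with `test_`, this would be picked up by running `pytest` in the same directory.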

Elasticsearch

For state-of-the-art search capabilities in our app, Elasticsearch is our weapon of choice. It's scalable, near real-time, and distributed by design. We will set this up with Docker and Docker-Compose.

psycopg2-binary

For interacting with PostgreSQL, the psycopg2-binary package provides the necessary hooks.

drf-yasg

A Swagger generator for DRF. It's like having an eloquent poet describe your APIs in a comprehensible and interactive manner.

Docker and Docker Compose

Our knight in shining armor for ensuring that the application runs consistently across all platforms, from Windows to Linux to macOS. Docker enables us to package an application and its dependencies into a 'container'. This ensures that the application will run consistently regardless of where the container is deployed. Thus, the age-old development conundrum, "It works on my machine," is rendered obsolete.

Docker-Compose facilitates the definition and orchestration of multi-container Docker applications. It uses a simple YAML file to configure all of your application's services, networks, and volumes, allowing you to streamline complex setups.

Installing Docker

Windows

For Windows 10 Home: Download Docker Desktop for Windows and follow the installation guide.

For older versions: You might need to use Docker Toolbox. Note that Docker Toolbox has been deprecated, so treat it as a last resort.

macOS

- Download Docker Desktop for Mac and follow the installation instructions.

Linux

The installation varies depending on your Linux distribution. For Ubuntu, you can install Docker via the apt package manager:

sudo apt update

sudo apt install docker.io

For other distributions, please refer to the official documentation.

Installing Docker-Compose

Docker-Compose can be installed separately but often comes bundled with Docker Desktop on Windows and macOS. For Linux users:

Download the latest version of Docker-Compose:

sudo curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

If the download comes back as "Not Found", check the releases page for the exact file name; newer releases may use lowercase names such as docker-compose-linux-x86_64.

Apply executable permissions to the binary:

sudo chmod +x /usr/local/bin/docker-compose

Verifying the Installation

After installing both Docker and Docker-Compose, you can verify that they are installed correctly with:

docker --version

docker-compose --version

If you see the version numbers, then rejoice! You've successfully installed Docker and Docker-Compose.
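If you prefer to script this check, the sketch below does the same thing from Python using only the standard library. The `tool_version` helper is a hypothetical name introduced here; it simply looks the command up on your PATH and runs it with `--version`.

```python
import shutil
import subprocess


def tool_version(command):
    """Return the output of `<command> --version`, or None if unavailable."""
    if shutil.which(command) is None:
        return None  # command is not on PATH
    result = subprocess.run(
        [command, "--version"], capture_output=True, text=True, check=False
    )
    if result.returncode != 0:
        return None  # command exists but did not run cleanly
    return result.stdout.strip() or result.stderr.strip()


if __name__ == "__main__":
    for tool in ("docker", "docker-compose"):
        version = tool_version(tool)
        print(f"{tool}: {version if version else 'not found'}")
```

A "not found" line for either tool suggests the installation step above needs another look.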

Dockerfile and Docker-Compose file

After successfully installing Docker and Docker-Compose, it's time to set up the containers for our e-commerce application. We will bring the blueprint of our application's architecture to life by crafting the Dockerfile and Docker-Compose files. For now, we will construct two containers, one for Django and one for PostgreSQL, and we will add more as needed during development. Let's dive in!

Create a project folder, i.e. your working directory (I will call mine 'tut'), then navigate to it:

mkdir tut

cd tut

Now create three files: Dockerfile, docker-compose.yml, and requirements.txt.

- A Dockerfile is a script that contains the commands and instructions used to create a Docker image.

- Docker-Compose allows us to define our multi-container application in a single file, and then spin up our application with a single command (docker-compose up).

- The requirements.txt file lists the Python dependencies our Django app needs to have installed.

touch Dockerfile && touch docker-compose.yml && touch requirements.txt

Now open this directory in your favorite code editor (I love VS Code), then add the code below to your Dockerfile:

# Use an official Python runtime based on Debian 10 "buster" as a parent image
FROM python:3.9-slim-buster

# Environment variables: send Python output straight to the container logs
ENV PYTHONUNBUFFERED=1

# Create and set working directory
WORKDIR /app

# Install system dependencies
RUN apt-get update && apt-get install -y \
    gcc \
    python3-dev \
    musl-dev \
    libpq-dev

# Install Python dependencies
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy project files into the container (currently just three files)
COPY . /app/

Open the requirements.txt file and add the lines below

# Web framework for building web applications

Django

# Toolkit for building Web APIs on top of Django

djangorestframework

# Yet Another Swagger Generator: Automated generation of OpenAPI schemas for Django REST Framework

drf-yasg

# Code formatter for enforcing a uniform code style

black

# Tool for measuring code coverage of Python programs

coverage

# Tool for checking Python code against some of the PEP8 style guides

flake8

# PostgreSQL adapter for Python, in its standalone binary form for easier installation

psycopg2-binary

# Library for handling images, used by Django for ImageFields and other image processing tasks

Pillow

# Framework for writing, running, and structuring tests in Python

pytest

# Reads environment variables from a .env file, useful for local development

python-dotenv

Now let's update the docker-compose file

version: '3'

services:
  db:
    image: postgres:latest
    volumes:
      - postgres_data:/var/lib/postgresql/data
    environment:
      POSTGRES_DB: ${DB_NAME}
      POSTGRES_USER: ${DB_USER}
      POSTGRES_PASSWORD: ${DB_PASSWORD}

  web:
    build: .
    command: ["python", "manage.py", "runserver", "0.0.0.0:8000"]
    environment:
      DEBUG: ${DEBUG}
      SECRET_KEY: ${SECRET_KEY}
    volumes:
      - .:/app
    ports:
      - "8000:8000"
    depends_on:
      - db

volumes:
  postgres_data:

In this docker-compose.yml file, we define multiple services:

- web: This is our Django web application. It is built from the Dockerfile in the current directory.

The volume used in the web service mounts the project directory into the container, so files created or changed on your machine are immediately visible inside the container.

- db: This service uses the latest PostgreSQL image and sets some environment variables.

The volume's primary role here is to persist the data the database generates. When a PostgreSQL container initializes, it writes data to /var/lib/postgresql/data. By using a volume, we ensure that this data is not lost when the container stops or is removed. This is crucial for databases, as you'd typically want to preserve the database files across container restarts or updates.

You will notice that both service configurations in the docker-compose file reference environment variables such as ${DEBUG} and ${DB_NAME}, which we need to make available to Docker Compose. Let's do this by creating a new file named '.env':

touch .env

Open the file and add the content below

# Django project secret key
SECRET_KEY=""

# Debug value to use in the project: 1 for True, 0 for False
DEBUG=1

# PostgreSQL environment variables
# Database password
DB_PASSWORD=""

# Database name
DB_NAME=""

# Database user: this is usually postgres
DB_USER="postgres"

# Database host: the host name to use in the Django settings;
# it is the database service name used in the docker-compose file
DB_HOST="db"
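Since several of these values start out empty, it can help to check the file before starting the containers. The sketch below is a deliberately simplified parser, not the one Docker Compose uses: it only handles plain KEY=value lines, blank lines, and # comments. The `parse_env` and `missing_keys` names are hypothetical helpers introduced for illustration.

```python
from pathlib import Path

REQUIRED_KEYS = (
    "SECRET_KEY", "DEBUG", "DB_NAME", "DB_USER", "DB_PASSWORD", "DB_HOST",
)


def parse_env(text):
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Tolerate spaces around "=" and strip surrounding quotes
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(text, required=REQUIRED_KEYS):
    """Return the required keys that are absent or have an empty value."""
    values = parse_env(text)
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    env_file = Path(".env")
    if env_file.exists():
        print("Missing or empty:", missing_keys(env_file.read_text()))
    else:
        print("No .env file found in the current directory")
```

Run against the template above, it would report SECRET_KEY, DB_NAME, and DB_PASSWORD as still needing values.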

Now it's time to create the containers. Run the command below in the directory that contains the Dockerfile and docker-compose file:

docker-compose up --build -d

The docker-compose up --build -d command does several things at once: it builds the images, launches the services defined in your docker-compose.yml file, and runs them in the background. Let's dissect its components to better understand what it does:

docker-compose up

This is the core command for bringing up Docker services as defined in the docker-compose.yml file. When you run this command, Docker Compose will start up all the services defined in the configuration file in the correct order, respecting dependencies. It also does other things like creating networks and volumes if they don't already exist.
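One caveat worth knowing: with the simple depends_on list used in our compose file, "respecting dependencies" means start order only. Compose starts the db container before web, but it does not wait for PostgreSQL to be ready to accept connections. A minimal sketch of a readiness check is shown below; `wait_for_port` is a hypothetical helper, and a successful TCP connection is only a coarse signal that the server is listening.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection succeeds, False if timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Try to open (and immediately close) a connection
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, unreachable, or name not resolvable yet
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Inside the web container, a call such as `wait_for_port("db", 5432)` would block until the database service is listening, assuming PostgreSQL's default port 5432.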

--build

This flag is an explicit instruction to Docker Compose to build the images before starting the containers. In a standard development workflow, if the Docker image already exists on your system, docker-compose up would use that image to create a new container.

However, adding --build ensures that your application is running in a container created from the latest build of your image, incorporating any changes you've made to the Dockerfile. It performs a docker-compose build before docker-compose up.

-d

This flag stands for "detached mode." When you run docker-compose up -d, Docker Compose will start your services in the background and leave them running. Without this flag, Docker Compose would start the services and display their log output in your terminal session, effectively tying up your terminal.

By using -d, you're freeing up your terminal while keeping the services running in the background, a commonly preferred setup for running services on a remote server or during development when you don't need to see the log output all the time.

In Summary

So, when you run docker-compose up --build -d, Docker Compose will:

- Build the Docker images as per the Dockerfile(s) in your project (because of --build).

- Start up all the services defined in docker-compose.yml.

- Run everything in the background (because of -d).

At this point the database container should be up, but the Django container will have exited. That is because we asked it to run the command below:

python manage.py runserver 0.0.0.0:8000

but we don't have a manage.py file yet! Yes, you guessed right: it's time to create our Django project!

Create Django Project

To create our Django project we need a local virtual environment, since the Django container is down. I use pipenv for this, and I will create one from the directory that contains the docker-compose file:

pipenv shell

With your virtual environment activated, it's time to install the requisite Python packages. Use your requirements.txt file to install them all in one fell swoop:

pipenv install -r requirements.txt

Let's commence with the creation of our Django project. The project's name shall be 'ecoms'. By appending a '.' at the end, we instruct Django not to generate an extra enclosing directory, which is especially useful when working with Docker containers.

django-admin startproject ecoms .

Upon successful execution, a new directory named 'ecoms' along with a file named 'manage.py' will materialize in your working directory. These are the initial files that make up a Django project.
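The generated ecoms/settings.py does not yet know about the values in our .env file. As a rough sketch of how they could be wired in, the snippet below reads them from the environment. The `env_flag` and `database_settings` helpers and the DB_PORT variable are assumptions introduced here, not something Django generates. Note also that the compose file as written passes only DEBUG and SECRET_KEY to the web service, so the DB_* variables would have to be added under its environment section or loaded with python-dotenv.

```python
import os


def env_flag(value, default=False):
    """Interpret "1", "true", or "yes" (any case) as True."""
    if value is None:
        return default
    return value.strip().lower() in {"1", "true", "yes"}


def database_settings(env):
    """Build a Django DATABASES dict from DB_* variables (hypothetical helper)."""
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env.get("DB_NAME", ""),
            "USER": env.get("DB_USER", "postgres"),
            "PASSWORD": env.get("DB_PASSWORD", ""),
            # "db" is the service name from docker-compose.yml
            "HOST": env.get("DB_HOST", "db"),
            # DB_PORT is an assumed variable; 5432 is PostgreSQL's default
            "PORT": env.get("DB_PORT", "5432"),
        }
    }


# In ecoms/settings.py these would replace the generated values
DEBUG = env_flag(os.environ.get("DEBUG"))
SECRET_KEY = os.environ.get("SECRET_KEY", "")
DATABASES = database_settings(os.environ)
```

Keeping the parsing in small functions makes it easy to unit test the settings logic without starting Django.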

Before bringing our new changes into effect, we need to bring down any existing Docker containers. This ensures that no conflicts occur when we launch the new configurations.

docker-compose down

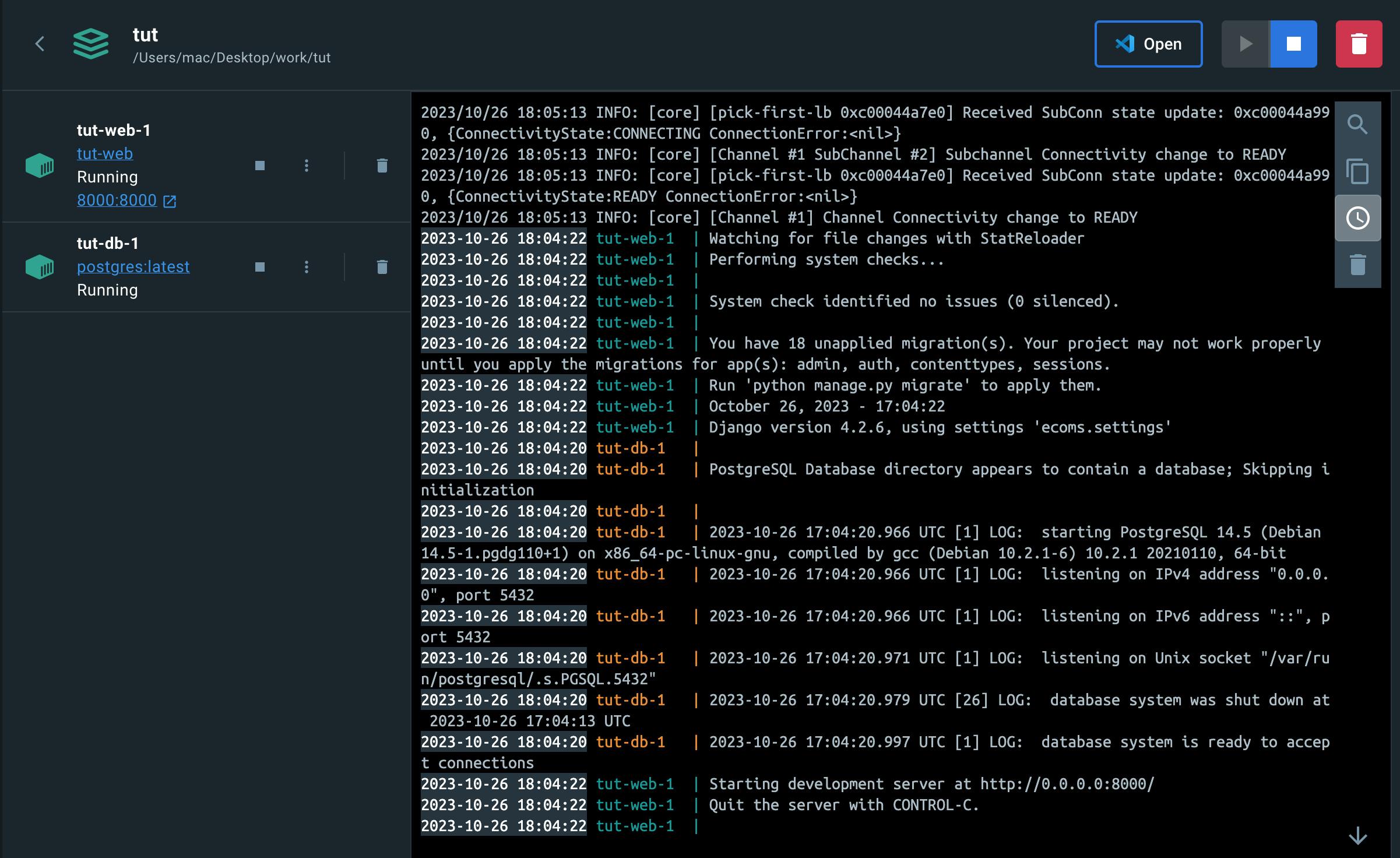

It's the moment of truth! Launch your Docker containers in detached mode. The '-d' flag accomplishes this, letting you continue using the terminal while the containers run in the background.

docker-compose up -d

This time both the database and Django containers should be up and running.

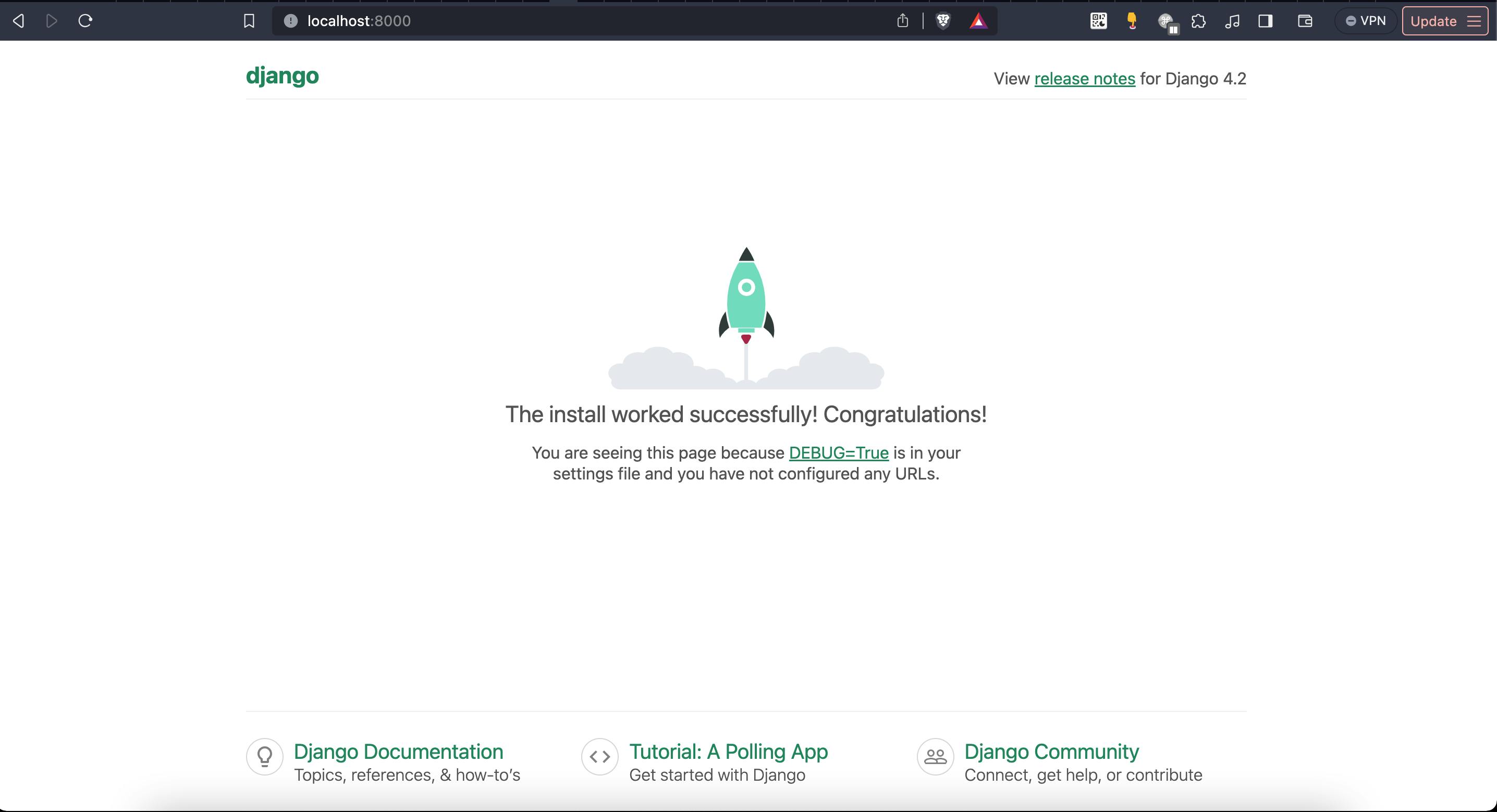

Open your web browser and navigate to localhost:8000. If all goes according to plan, you should be greeted with Django's default welcome page.
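The same check can be done without a browser. The sketch below uses only the standard library; `http_status` is a hypothetical helper name, and the URL assumes the 8000:8000 port mapping from our docker-compose file.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=5.0):
    """Return the HTTP status code for url, or None if no server answered."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # the server answered, but with an error status
    except (urllib.error.URLError, OSError):
        return None  # connection refused, timed out, or host unreachable


if __name__ == "__main__":
    print(http_status("http://localhost:8000/"))
```

A printed status code means the Django development server is answering requests; None suggests the web container is not running or the port is not mapped.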

Wow, what a journey indeed! Setting up a robust, containerized development environment is a quintessential first step in any ambitious web development project. With Django as our trusted steed and Docker as our sturdy chariot, we've laid the groundwork for a modern, scalable, and above all, professional e-commerce backend.

What Lies Ahead: Authentication and Beyond

In the next installment of this series, we will delve into one of the cornerstone features of any serious application: the authentication system. Expect to explore topics such as:

- JWT (JSON Web Tokens) for secure, stateless authentication

- Integrating OAuth providers like Google and Facebook for social logins

- Implementing Two-Factor Authentication (2FA) for enhanced security

- Customizing Django's built-in User model to fit the unique needs of an e-commerce platform

We'll dive deep into the code, adhering to best practices, and perhaps even sprinkle some Test-Driven Development (TDD) techniques into the mix.